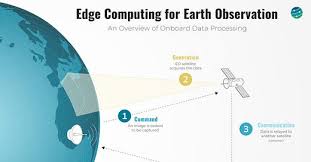

Abstract. As Earth-observing sensors proliferate, scientific instruments grow more capable, and constellations generate terabytes per day, satellite operators face a basic constraint: downlink bandwidth is limited, and decision loops that run through the ground are slow. Edge computing in space (placing compute, storage and AI inference close to sensors on orbiting platforms) addresses this by turning satellites into real-time data processors, decision nodes and service platforms. This article explains why on-orbit edge matters, the architectures and hardware that enable it, networking and software patterns, representative use cases, economic and regulatory implications, technical challenges and a practical roadmap for moving from today’s demonstrations to operational, resilient space edge infrastructure.

Key short takeaways:

- On-orbit edge reduces downlink cost and latency, enabling real-time applications (disaster response, ISR, autonomous rendezvous, industrial monitoring).

- Technology is already flying: HPE’s Spaceborne Computer-2 on the ISS, cloud vendors’ satellite compute experiments, and multiple demonstration payloads show viability. (Sources: HPCwire; Amazon Web Services, Inc.)

- Architectures range from modest inference boxes in smallsats to planned megaconstellations of in-space AI supercomputers, each with distinct tradeoffs in power, thermal control, fault tolerance and software. (Sources: Live Science; OrbitsEdge)

1. Why put compute into space? The case for the space edge

Satellites and space sensors are evolving along three axes simultaneously: higher resolution (optical, SAR, hyperspectral), higher revisit cadence (constellations and ride-share launches) and richer payloads (multisensor suites and on-board AI). That creates three operational pressures:

- Bandwidth and cost pressure. Raw downlink capacity is expensive and finite. Sending every raw frame or waveform to the ground wastes bandwidth and imposes storage and processing costs on ground systems.

- Latency and timeliness. Many applications depend on decisions made within seconds to minutes (disaster monitoring, maritime anomaly detection, tactical ISR). Placing analytics on the satellite removes the round trip to the ground for initial triage and can cut time-to-action from minutes or hours to seconds.

- Autonomy and scale. Future on-orbit operations — autonomous proximity maneuvers, coordinated multi-satellite sensing, in-space logistics — require local decision loops and resilient processing that cannot rely on continuous ground control.

Edge computing in space directly addresses these pressures by: (a) filtering and compressing data in orbit so only high-value products are downlinked, (b) running near-real-time inference and decisioning where latency matters, and (c) enabling distributed applications that span constellations, human teams and mission control. These benefits are already being validated with in-space experiments and commercial demonstrations, including HPE’s Spaceborne Computer-2 on the ISS and cloud vendor experiments integrating compute functions into orbiting platforms. (Sources: HPCwire; Amazon Web Services, Inc.)

2. Core capabilities of a space edge node

A space edge node is more than a computer in orbit. An operational node combines multiple capabilities:

- Compute: CPUs, GPUs/TPUs or specialized AI accelerators sized to mission (from low-power inference cores for smallsats to multi-rack machines for orbital data centers).

- Storage: Persistent flash or SSD pools for buffering raw data, checkpointing models and temporary product repositories. Storage must be radiation-tolerant and support degradation-aware file systems.

- Networking: RF links (L/S/X/Ku/Ka-band) and optical intersatellite links, secure ground links (S-band/Ka-band), and mesh protocols for constellation coordination. High-speed crosslinks enable distributed processing across satellites.

- Sensor interface & real-time I/O: Low-latency data buses connected to payloads (e.g., PCIe/SpaceFibre/SpaceWire adapters) for direct ingestion of sensor streams.

- Software & orchestration: Containerization, orchestration frameworks (container schedulers adapted for intermittent links), AI model management, telemetry and remote update mechanisms.

- Resilience & safety: Watchdogs, hardware redundancy, fault containment and safe-mode capabilities to avoid cascading failures and interference with avionics.

- Power & thermal management: Solar array energy budgets, battery buffering, and thermal control (radiators, heat pipes) sized to the power dissipated by compute.

The tight coupling of these subsystems (particularly power/thermal vs compute) defines a node’s class. For example, a cubesat inference module might be a low-power Arm SoC + TPU running wake-on-event detectors, while an ISS or free-flying data center can host dozens of CPUs and accelerators with active thermal systems. HPE’s SBC-2 is an example of a high-capability experimental node operating on the ISS, demonstrating research and application workloads in a space environment. (Source: HPCwire)
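The power-versus-compute coupling can be made concrete with a rough orbit-average energy balance. The sketch below is illustrative only: the function name and all wattages are hypothetical, and battery losses, degradation and eclipse depth-of-discharge limits are ignored.

```python
def max_compute_duty_cycle(orbit_avg_generation_w, baseline_load_w, compute_load_w):
    """Fraction of an orbit the compute payload can run without a net battery drain.

    Energy balance over one orbit (losses ignored, an assumption):
        generation >= baseline + duty * compute
    """
    if compute_load_w <= 0:
        raise ValueError("compute_load_w must be positive")
    surplus_w = orbit_avg_generation_w - baseline_load_w
    # Clamp to [0, 1]: no surplus means no compute, large surplus means always-on.
    return max(0.0, min(1.0, surplus_w / compute_load_w))


# Hypothetical cubesat: 12 W orbit-average generation, 8 W bus load, 10 W accelerator.
duty = max_compute_duty_cycle(12.0, 8.0, 10.0)
print(f"Sustainable compute duty cycle: {duty:.0%}")
```

With these made-up numbers the accelerator could run for about 40% of each orbit, which is why small nodes tend toward wake-on-event designs.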

3. Architectures and deployment models

There are several practical deployment models for edge computing in space — each matches different payload, budget and mission constraints.

3.1 Smallsat inference modules (sensor-proximal)

- Use: immediate triage onboard small EO cubesats and microsats.

- Design: very low power (a few watts to tens of watts), specialized accelerators (e.g., low-power NPUs), small SSD buffer.

- Benefits: cheapest path to operational edge; per-satellite autonomy for filtering and event detection.

- Limitations: limited model complexity and limited persistent storage.

3.2 Hosted payload compute (hosted on larger spacecraft)

- Use: a larger satellite hosts a general-purpose compute payload that services multiple instruments or tenant payloads.

- Design: more compute and storage, higher power & cooling budget, opportunities for multi-tenant virtualization and secure isolation.

- Benefits: economies of scale and flexible mission provisioning (multiple missions share a data center in orbit).

- Limitations: scheduling/tenancy management and failure impact on host bus.

3.3 Edge constellations with distributed orchestration

- Use: constellations that share processing across nodes, offloading heavy jobs to nearby satellites via high-rate inter-satellite links (ISLs).

- Design: moderate compute per node, high-bandwidth optical or RF crosslinks, distributed storage and compute orchestration.

- Benefits: scale through federation — heavy workloads can be split across the constellation, with near-real-time collaborative inference.

- Limitations: complexity in synchronization, consensus, and link availability.

3.4 Orbital data centers & space data centers

- Use: full data-center class machines in orbit (for backhauling large datasets, training large models, or providing on-demand HPC).

- Design: racks of servers, active thermal control, large solar arrays and batteries; may use space cooling advantages.

- Benefits: enormous compute near sensors and other on-orbit assets; could reduce overall space-to-Earth data movement. Recent news indicates ambitions to build large constellations focused on in-space AI compute. (Source: Live Science)

- Limitations: high capital cost, launch risk, political/regulatory questions (which jurisdiction applies to processing in orbit?), and strong engineering demands.

Real deployments will mix these models: smallsats for frontline filtering, hosted compute for aggregated processing, and a few orbital data centers for heavy jobs.

4. Networking and latency: how the space edge shortens decision loops

The advantages of edge come from two networking realities:

- Reduced round-trip time (RTT). A single hop from sensor → on-sat compute → action avoids the satellite→ground→cloud→ground path which can be dominated by ground station scheduling and processing queueing. Even with a good ground chain, TT&C and cloud queues introduce tens of seconds to many minutes of latency — sometimes far longer for busy ground infrastructures. An onboard decision can reduce that to seconds or sub-second for local loops (e.g., payload-triggered retargeting or crosslink-shared action).

- Bandwidth economy. Rather than transferring every GB of raw data, satellites can downlink only compressed products, metadata, events, or regions of interest (ROI), saving both cost and time.
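To illustrate the bandwidth point, here is a back-of-the-envelope calculation of contact time with and without on-board ROI extraction. All figures (data volume, kept fraction, link rate) are hypothetical and chosen only to show the arithmetic; protocol overhead and link margin are ignored.

```python
def downlink_seconds(data_gb, link_mbps):
    """Contact time in seconds to move data_gb gigabytes at link_mbps megabits per second."""
    return data_gb * 8_000 / link_mbps  # 1 GB = 8,000 megabits (decimal units)


raw_gb = 100.0        # hypothetical raw data collected per orbit
kept_fraction = 0.02  # hypothetical share kept after on-board ROI extraction
link_mbps = 400.0     # hypothetical downlink rate

raw_time = downlink_seconds(raw_gb, link_mbps)
roi_time = downlink_seconds(raw_gb * kept_fraction, link_mbps)
print(f"Raw downlink: {raw_time:.0f} s, ROI-only downlink: {roi_time:.0f} s")
```

Under these assumptions the raw data would need 2,000 s of contact time, more than a single typical LEO pass offers, while the ROI products need 40 s.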

Example: a simple latency comparison

- Conventional path: sensor captures image → bulk transfer to ground via scheduled pass (minutes to tens of minutes of wait) → ground ingest and analysis (minutes) → tasking decision (minutes) → uplink command (minutes). Total latency is easily tens of minutes to hours, depending on ground coverage and queues.

- Edge path: sensor captures image → on-board inference flags event and extracts ROI → downlink small ROI + notification → ground confirms and, optionally, issues follow-up tasking. End-to-end latency can be seconds to a few minutes.
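The two paths can be compared by summing per-stage delays. The stage durations below are hypothetical values picked from within the ranges quoted above, and the edge path assumes a downlink or relay opportunity is available shortly after detection.

```python
# Hypothetical per-stage latencies in seconds (illustrative, not measured).
conventional = {
    "wait for scheduled pass": 15 * 60,
    "bulk downlink": 5 * 60,
    "ground ingest and analysis": 10 * 60,
    "tasking decision": 5 * 60,
    "uplink command": 5 * 60,
}
edge = {
    "on-board inference": 5,
    "ROI and notification downlink": 30,
    "ground confirmation": 60,
}


def total_latency_s(stages):
    """Sum the stage delays of a strictly sequential pipeline."""
    return sum(stages.values())


for name, stages in (("conventional", conventional), ("edge", edge)):
    print(f"{name}: {total_latency_s(stages) / 60:.1f} min")
```

With these inputs the conventional chain takes 40 minutes and the edge chain about 1.6 minutes; the ratio, not the absolute numbers, is the point.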

Edge also enables local closed loops — e.g., a satellite detects a collision risk and autonomously performs an avoidance maneuver based on on-board trajectories and policies, with only an immediate post-fact report to ground. This capability is especially important for dense LEO regions and responsive military/tactical operations.

Crosslink latency and throughput are central to distributed architectures. Optical ISLs at tens of Gbps and RF crosslinks at lower rates let satellites form distributed processing fabrics where heavy computation migrates to nodes with spare capacity or better power/thermal headroom.
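Whether migrating a job over a crosslink is worthwhile comes down to comparing transfer time plus remote compute time against local compute time. The following is a minimal sketch of that comparison; the function, its parameters and the example numbers are assumptions for illustration, and a real scheduler would also weigh power, thermal headroom and link availability.

```python
def should_offload(job_gb, link_gbps, local_compute_s, remote_compute_s, link_latency_s=0.05):
    """Return True if sending the job to a neighbour finishes sooner than running it locally."""
    transfer_s = job_gb * 8 / link_gbps  # gigabytes -> gigabits, divided by the link rate
    return transfer_s + link_latency_s + remote_compute_s < local_compute_s


# Hypothetical 5 GB job: 60 s locally, 10 s on a better-provisioned neighbour.
print(should_offload(5.0, 10.0, 60.0, 10.0))  # 10 Gbps optical ISL
print(should_offload(5.0, 0.1, 60.0, 10.0))   # 100 Mbps RF crosslink
```

In this example the optical link makes offloading attractive (about 14 s total), while the RF link does not (about 410 s total).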

5. Software stacks and orchestration patterns

Space edge software must marry cloud concepts (containers, orchestration, CI/CD) with the constraints of intermittent connectivity, radiation effects and platform heterogeneity.

5.1 Containerization and virtualization

Containers (e.g., Docker, OCI) provide application portability. On orbit, lean runtimes (e.g., lightweight container engines or unikernels) are preferable. Containers make it straightforward to push validated model images and application stacks to satellites during a contact window.

5.2 Scheduling and orchestration

Conventional orchestrators (Kubernetes) assume high-availability networking and shared storage—assumptions that break in space. Space-aware schedulers must:

- manage intermittent links and opportunistic windows,

- respect energy budgets (schedule heavy compute for the sunlit portion of the orbit),

- handle graceful degradation and job preemption,

- support prioritized workloads (critical ISR over low-priority analytics).

Prototypes and vendor solutions adopt either stripped down orchestrators or custom schedulers that accept policy constraints from mission control and autonomously optimize locally.
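As a rough illustration of the scheduling constraints listed above, the sketch below plans a single window greedily: highest-priority jobs first, within an energy budget, deferring sunlight-only jobs during eclipse. The `Job` fields, `plan_window` and the example jobs are hypothetical and do not represent any vendor's scheduler.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int         # lower number = more urgent
    energy_wh: float      # estimated energy needed to complete the job
    needs_sunlight: bool  # heavy jobs that should only run while sunlit


def plan_window(jobs, energy_budget_wh, in_sunlight):
    """Greedy plan for one window; returns (scheduled names, deferred names)."""
    scheduled, deferred = [], []
    remaining = energy_budget_wh
    for job in sorted(jobs, key=lambda j: j.priority):
        if job.needs_sunlight and not in_sunlight:
            deferred.append(job.name)   # wait for the sunlit part of the orbit
        elif job.energy_wh <= remaining:
            scheduled.append(job.name)
            remaining -= job.energy_wh
        else:
            deferred.append(job.name)   # does not fit in this window's energy budget
    return scheduled, deferred


jobs = [
    Job("isr-detect", 0, 3.0, False),
    Job("model-retrain", 1, 20.0, True),
    Job("crop-analytics", 2, 6.0, False),
]
print(plan_window(jobs, energy_budget_wh=8.0, in_sunlight=False))
print(plan_window(jobs, energy_budget_wh=30.0, in_sunlight=True))
```

In eclipse with 8 Wh available, only the critical detection job runs; in sunlight with 30 Wh, all three fit. Preemption and link-window awareness are left out to keep the sketch short.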

5.3 Model lifecycle and federated learning

AI models deployed in orbit require lifecycle management: model distribution, A/B testing, rollbacks and retraining. Federated learning architectures can let satellites jointly update a shared model by exchanging gradients or compressed updates over ISLs, limiting raw data transfer and protecting sensitive data sources. Update validation and consensus are critical — a corrupt model push could degrade many nodes.
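One widely used aggregation scheme is federated averaging, in which each node's locally trained weights are combined in proportion to how much data it trained on. The sketch below shows only that aggregation step in plain Python; it is an illustration of the general technique, not a description of any deployed system, and the weight vectors and sample counts are made up.

```python
def federated_average(updates):
    """Sample-weighted average of model weight vectors.

    updates: list of (weights, num_samples) pairs, one per satellite.
    """
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("at least one update must carry samples")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all weight vectors must have the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical satellites with 100 and 300 local training samples.
print(federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))
```

A production pipeline would add the validation step the text calls for, for example rejecting updates that fail a signature check or that degrade accuracy on a held-out set.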

5.4 Observability, debugging and telemetry

On-orbit observability is challenging: telemetry is scarce and costly. Effective observability frameworks compress and prioritize health metrics, use anomaly detection to flag issues, and provide safe remote debugging (e.g., sandboxed testbeds on the satellite for validation).
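Prioritizing health metrics under a fixed telemetry budget can be sketched as a simple greedy selection. The metric names, priorities and sizes below are hypothetical; a real system would likely also compress payloads and age out stale metrics.

```python
def select_telemetry(metrics, budget_bytes):
    """Pick metrics for the next downlink, most important first.

    metrics: list of (name, priority, size_bytes); lower priority number = more important.
    Returns (chosen names, bytes used).
    """
    chosen, used = [], 0
    for name, _priority, size in sorted(metrics, key=lambda m: (m[1], m[2])):
        if used + size <= budget_bytes:
            chosen.append(name)
            used += size
    return chosen, used


metrics = [
    ("full_debug_log", 2, 4096),
    ("gpu_temp_histogram", 1, 256),
    ("watchdog_resets", 0, 8),
    ("ecc_error_count", 0, 16),
]
print(select_telemetry(metrics, budget_bytes=300))
```

With a 300-byte budget the two critical counters and the temperature histogram are sent, and the debug log waits for a larger window.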

5.5 Security & software supply chain

Software updates to space systems are high-risk. Signed update chains, reproducible builds, attestation of hardware and software (TPM or equivalent), and thorough test harnesses are essential. Patch windows must balance safety and agility.
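The core of a signed update chain is that an image is staged only if its authentication tag verifies. The sketch below uses HMAC-SHA256 from the Python standard library purely as a stand-in: a flight system would more plausibly use asymmetric signatures so the spacecraft holds only a public key. The function names and key are hypothetical.

```python
import hashlib
import hmac


def sign_image(image: bytes, key: bytes) -> bytes:
    """Ground side: compute an authentication tag over the update image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_update(image: bytes, tag: bytes, key: bytes) -> bool:
    """Spacecraft side: accept the image only if the tag matches."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(expected, tag)


key = b"demo-key-not-for-flight"  # hypothetical shared secret
image = b"model-v2 container layer bytes"
tag = sign_image(image, key)

print(verify_update(image, tag, key))                # untouched image
print(verify_update(image + b"\x00", tag, key))      # corrupted or tampered image
```

The same check also catches uplink corruption, which is useful when a contact window is too short to retransmit blindly.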

Cloud vendors are already building space-specific SDKs and frameworks to help operators deploy “virtual missions” or edge workloads in orbit. Microsoft Azure, for example, has published tooling aimed at enabling satellite operators to run AI applications at the edge of space. (Source: techcommunity.microsoft.com)

6. Hardware building blocks and space-qualification

Designing hardware for edge nodes in space tightly couples the compute choice to platform constraints:

6.1 Compute platforms

- Low-power SoCs (Arm, RISC-V): used on cubesats for inference and control tasks; low thermal load and modest SWaP (size, weight, and power).

- Specialized NPUs/TPUs and FPGAs: offer high inference performance per watt and can be more tolerant to single-event effects when designed appropriately. Radiation-hard or radiation-tolerant variants are preferred for long missions.

- Commercial server CPUs/GPUs: used in hosted payloads and ISS experiments (e.g., HPE’s Spaceborne Computer family uses commercial-off-the-shelf servers with additional engineering to handle the space environment). (Source: HPCwire)

6.2 Storage & memory

Radiation-tolerant or error-correcting flash/SSD arrays, with filesystems and erasure coding that anticipate sector loss and bit flips.
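The simplest form of erasure coding is a single XOR parity block, which lets a stripe survive the loss of any one data block. The sketch below illustrates that idea only; real storage stacks typically use stronger codes (for example Reed-Solomon) alongside per-page ECC, and the block contents here are placeholders.

```python
def xor_parity(blocks):
    """XOR equal-length byte blocks together to form a parity block."""
    parity = bytearray(len(blocks[0]))
    for block in blocks:
        if len(block) != len(parity):
            raise ValueError("all blocks must have the same length")
        for i, byte in enumerate(block):
            parity[i] ^= byte
    return bytes(parity)


def recover_block(surviving_blocks, parity):
    """Rebuild the single missing block from the survivors and the parity block."""
    return xor_parity(list(surviving_blocks) + [parity])


stripe = [b"abcd", b"efgh", b"ijkl"]
parity = xor_parity(stripe)

# Simulate losing the middle block to a failed flash sector.
rebuilt = recover_block([stripe[0], stripe[2]], parity)
print(rebuilt == stripe[1])
```

Single parity cannot locate silent bit flips by itself, so it is normally paired with checksums that identify which block is bad.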

6.3 Thermal & power systems

Compute dissipates heat; in vacuum, radiator sizing, conductive paths and careful placement are essential. Power budgets dictate duty cycles for heavy compute; mission planners often schedule training or batch processing during peak sunlight.
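A first-order radiator estimate follows from the Stefan-Boltzmann law: radiated power is emissivity times sigma times area times the fourth power of temperature. The sketch below assumes a single-sided radiator and ignores solar, albedo and Earth infrared loading unless they are folded into an effective sink temperature; the emissivity and the 500 W example are assumptions.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def radiator_area_m2(heat_w, radiator_temp_k, emissivity=0.85, sink_temp_k=0.0):
    """Radiator area needed to reject heat_w by radiation alone."""
    net_flux = emissivity * SIGMA * (radiator_temp_k**4 - sink_temp_k**4)  # W per m^2
    if net_flux <= 0:
        raise ValueError("radiator must be warmer than its effective sink")
    return heat_w / net_flux


# Hypothetical 500 W compute payload with the radiator held at 300 K.
area = radiator_area_m2(500.0, 300.0)
print(f"Required radiator area: {area:.2f} m^2")
```

Under these idealized assumptions 500 W needs roughly 1.3 m² of radiator; environmental heat loads push the real figure higher, which is one reason heavy compute is often duty-cycled.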

6.4 Reliability & fault tolerance

Hardware redundancy, graceful degradation (ability to continue offering reduced capability if some components fail), and software checkpointing are essential to long missions.
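A common way to exploit hardware redundancy is majority voting across replicated computations, often called triple modular redundancy when three replicas are used. The sketch below shows only the voting step and is a generic illustration; the replica outputs are made up.

```python
from collections import Counter


def majority_vote(results):
    """Return the value produced by a strict majority of replicas, or raise if none exists."""
    value, count = Counter(results).most_common(1)[0]
    if count * 2 <= len(results):
        raise RuntimeError("no majority: replicas disagree")
    return value


# Three replicas compute the same checksum; one suffers a bit flip.
print(majority_vote([0x5A3C, 0x5A3C, 0x5A7C]))
```

When no majority exists, a sensible fallback is to restore from the last checkpoint and recompute rather than pick a value arbitrarily.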

Manufacturers and operators often balance two approaches: (a) use COTS parts with software/hardware mitigation to reduce cost and accelerate development, or (b) use space-qualified parts for long life and radiation tolerance. HPE’s SBC-2 and recent hosted payload experiments illustrate the COTS-plus-engineering route, enabling powerful capabilities onboard the ISS for research workloads. (Source: HPCwire)

7. Representative use cases

Edge computing in space unlocks and improves many mission types. Below are high-impact examples.

7.1 Rapid disaster monitoring & response

Satellites can detect floods, fires or storm damage and run on-board analytics to deliver immediate “actionable products” (damage maps, hotspot detections). First responders receive near-real-time alerts instead of waiting for full imagery download and ground processing.

7.2 Maritime domain awareness & anomaly detection

Edge-enabled satellites can scan shipping lanes, identify suspicious behavior (loitering, AIS spoofing), and alert authorities quickly, avoiding the need to transfer petabytes of SAR data to the ground.

7.3 Tactical ISR and time-sensitive targeting

Military and coalition operations benefit from low-latency, on-platform inference for identification and cueing. On-orbit edge can support local data fusion, geolocation and rapid targeting decisioning within mission policies and ROEs.

7.4 Space situational awareness (SSA) & collision avoidance

Satellites can perform on-board optical tracking and debris detection, run local conjunction analysis and autonomously execute collision avoidance when time is too short for ground decision loops.
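A first screening step in local conjunction analysis is to estimate the time and distance of closest approach. The sketch below assumes straight-line relative motion, which is only reasonable over the short span around an encounter; operational screening also propagates orbits and uses covariance to estimate collision probability. The state vector and the 2 km threshold are hypothetical.

```python
def closest_approach(rel_pos_km, rel_vel_km_s):
    """Return (time_s, miss_distance_km) of closest approach for linear relative motion."""
    v_squared = sum(v * v for v in rel_vel_km_s)
    if v_squared == 0:
        t = 0.0  # no relative motion: the separation never changes
    else:
        r_dot_v = sum(r * v for r, v in zip(rel_pos_km, rel_vel_km_s))
        t = max(0.0, -r_dot_v / v_squared)  # only consider the future
    closest = [r + v * t for r, v in zip(rel_pos_km, rel_vel_km_s)]
    miss = sum(c * c for c in closest) ** 0.5
    return t, miss


# Hypothetical object 10 km ahead, offset 1 km, closing at 5 km/s.
t_ca, miss_km = closest_approach((10.0, 1.0, 0.0), (-5.0, 0.0, 0.0))
needs_review = miss_km < 2.0  # hypothetical screening threshold
print(t_ca, miss_km, needs_review)
```

Here closest approach occurs in 2 s at a 1 km miss distance, a timescale on which a ground decision loop is clearly not an option.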

7.5 Scientific instrument pre-processing

High-throughput instruments (e.g., hyperspectral imagers, radio arrays) can pre-process raw streams for compression, RFI removal, or initial classification before sending compressed science products to Earth.

7.6 Autonomous rendezvous & robotics

On-board compute lets a servicing vehicle perform vision-based proximity ops and fine control during docking without continuous ground guidance, reducing latency and increasing autonomy for in-space servicing.

7.7 Commercial real-time services

Companies can provide near-real-time analytics (mineral detection, crop stress alerts, traffic monitoring) as hosted “virtual missions” on hosted compute platforms in orbit — enabling subscription models with lower data transfer costs.

Many of these use cases were anticipated by early demonstrations: HPE ran multiple experiments on the ISS to validate that COTS compute could run in space and accelerate time-to-insight for applications like genomics and image processing. Meanwhile, industry partnerships are building operational tools and SDKs to enable satellite operators to run AI workloads on orbit. (Sources: Microsoft Azure; hpe.com)

8. Economics and business models

Edge in space creates several commercial pathways.

8.1 Data-reduction as a service

Operators charge per-GB saved (or per-event processed) by providing on-orbit analytics that only downlink high-value products. This is attractive to EO customers who otherwise pay for heavy ground processing and storage.

8.2 Virtual missions & compute tenancy

A hosted compute provider (or satellite operator) offers compute time on orbit like a cloud provider. Customers upload models and run inference or batch jobs during scheduled windows. Microsoft Azure and other vendors are creating SDKs and services that point in this direction. (Source: techcommunity.microsoft.com)

8.3 Mission-assurance subscriptions

For critical assets (maritime monitoring or national security), customers pay for guaranteed low-latency detection services and prioritized downlink windows.

8.4 On-demand training and high-performance processing

Large players may bid for in-space training jobs or HPC bursts (if orbital data centers become viable), reducing ground-to-space transfer for massive datasets or specialized scientific needs.

Costs are driven by launch mass, platform build, and operations. Value accrues when savings in downlink, faster decisioning and new revenue streams exceed those costs. The growth of demonstration programs and vendor tooling suggests early markets (GEO life-extension, tactical ISR, disaster response) can provide anchor customers for the emerging space edge industry.

9. Security, sovereignty and regulation

Edge computing in space raises complex security and regulatory questions.

9.1 Cybersecurity and hardening

On-orbit compute is an attractive target. Best practices include:

- Signed and attested software updates,

- Hardware root of trust and tamper detection,

- Strong isolation between tenants,

- Encrypted data at rest and in transit,

- Intrusion detection adapted for low telemetry budgets.

9.2 Data sovereignty and export controls

Who owns processed outputs and where is processing legally situated? National regulations (export controls, data localization) and military concerns complicate multinational hosted compute. Regulators and operators must clarify policy frameworks for cross-border data processing in orbit.

9.3 Space traffic management and safety

Autonomous decision loops (e.g., automated collision avoidance) require transparent rulesets and logging to ensure accountability. Regulatory agencies and SSA providers must coordinate with operators deploying autonomous orbiting nodes.

9.4 Spectrum and crosslink regulation

High-throughput ISLs and optical links require spectrum management and licensing; operators must avoid interference and adhere to ITU and national regulations. Because beams and priorities can be reconfigured rapidly, dynamic spectrum management tools will be helpful.

10. Case studies and live demonstrations

A set of demonstrations already validates key parts of the space edge picture.

10.1 HPE Spaceborne Computer-2 (ISS)

HPE’s Spaceborne Computer-2, a rack-scale COTS server system, has run numerous experiments on the International Space Station, demonstrating that complex analytics (including genomics and image processing) can execute at the edge in space. These experiments shortened time-to-insight and validated operational concepts for hosted computing on a crewed platform. (Sources: HPCwire; ISS National Lab)

10.2 AWS satellite compute experiment

Amazon Web Services reported a milestone experiment demonstrating AWS compute and machine learning services running on an orbiting satellite, a major validation that cloud architectures and toolchains can be adapted to orbiting platforms for on-board processing. This highlights how major cloud vendors plan to support space edge use cases. (Source: Amazon Web Services, Inc.)

10.3 Azure Space Virtual Missions and genomics test

Microsoft’s Azure Space teams have demonstrated genomics workflows and published tooling to enable satellite operators to deploy AI workloads on hosted in-space infrastructure, showing that complex pipelines (genomics assembly, large-file analysis) can be executed on the ISS in partnership with HPE. (Sources: Microsoft Azure; techcommunity.microsoft.com)

10.4 Thales Alenia Space: IMAGIN-e payload

Thales Alenia Space announced IMAGIN-e, a demonstration payload for space edge computing aboard the ISS designed to accelerate EO insights by processing imagery in orbit, illustrating industry momentum across traditional aerospace primes. (Source: Thales Alenia Space)

10.5 Emerging large-scale plans (e.g., China’s Three-Body Computing Constellation)

Recent press reports indicate that groups in China have launched the first tranche of a planned large constellation designed specifically to host AI models and high-performance compute in orbit, signaling that ambitions for in-space AI at scale are being pursued outside the West as well. These programs, if they scale, will materially change the balance of capability for on-orbit processing. (Sources: Live Science; The Verge)

Together these demonstrations illuminate a viable trajectory: experimental validation on ISS and hosted payloads → commercial demos on LEO satellites → operational service offerings (virtual missions, hosted compute) → scaled constellations and orbital data centers.

11. Technical challenges and open research areas

Although progress is rapid, several technical and programmatic hurdles remain.

11.1 Power and thermal scaling

Compute density is power-hungry and produces heat. Novel thermal designs, dynamic duty cycling and power-aware scheduling are essential research targets.

11.2 Radiation effects on COTS components

COTS accelerators and storage must be hardened or coupled with software mitigation (error correction, redundancy). Research on radiation-tolerant NPUs and fault-tolerant system architectures remains vital.

11.3 Distributed consensus and orchestration under intermittent connectivity

Standard cloud orchestration presumes always-on connectivity. Space requires new distributed algorithms tolerant to partitions, asymmetric links, and power constraints.
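One existing family of partition-tolerant techniques is conflict-free replicated data types (CRDTs), where replicas update independently and merge deterministically whenever a link appears. The sketch below shows a grow-only counter, for example counting detections across a constellation; it is offered as an illustration of the general idea rather than a proposed solution, and the satellite identifiers are hypothetical.

```python
def increment(counter, node_id, amount=1):
    """Return a copy of the counter with node_id's own slot increased."""
    updated = dict(counter)
    updated[node_id] = updated.get(node_id, 0) + amount
    return updated


def merge(a, b):
    """Merge two replicas by taking the per-node maximum (commutative and idempotent)."""
    return {node: max(a.get(node, 0), b.get(node, 0)) for node in a.keys() | b.keys()}


def value(counter):
    """Total count across all nodes."""
    return sum(counter.values())


# Two satellites diverge during a partition, then exchange state over an ISL.
sat_a = {"sat-a": 3, "sat-b": 1}
sat_b = {"sat-b": 4, "sat-c": 2}
merged = merge(sat_a, sat_b)
print(merged == merge(sat_b, sat_a), value(merged))
```

Because the merge is order-independent and safe to repeat, replicas converge regardless of which links come up first or how often state is resent.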

11.4 Model validation, trust and provenance

Federated learning and in-space model updates necessitate provenance chains and validation to prevent model poisoning and to ensure models behave within safety envelopes.

11.5 Economic and regulatory frameworks

Business models depend on standards for tenancy, liability allocation and cross-border regulations. Policy research to define norms for in-space hosted compute and data sovereignty is urgent.

11.6 Sustainability and orbital environment

Increased in-space infrastructure raises concerns about orbital debris, spectrum congestion and environmental stewardship. Design-for-demise, minimal debris risk and shared SSA services will be key to responsible scaling.

12. Roadmap: how to go from demos to an operational space edge ecosystem

A practical staged roadmap accelerates deployment while managing risk.

Phase 0 — Validation and standards (now–2 years)

- Expand ISS and hosted payload demos (compute+AI).

- Convene industry–government working groups for APIs, telemetry standards, and security baselines.

- Pilot business models with anchor customers (disaster agencies, defense, agritech). (Sources: HPCwire; Thales Alenia Space)

Phase 1 — Early operational services (2–5 years)

- Smallsat inference fleets and hosted compute nodes go commercial.

- Cloud vendors offer “virtual missions” and edge SDKs enabling customers to deploy inference containers. (Sources: techcommunity.microsoft.com; Amazon Web Services, Inc.)

Phase 2 — Distributed constellations and crosslink fabrics (5–8 years)

- Optical ISLs and mesh networking become common; distributed orchestration systems manage workload migrations and federation.

- Regulatory frameworks for tenancy, data sovereignty and liability begin to solidify.

Phase 3 — Scale and orbital data centers (8–15 years)

- Orbital data centers and large compute constellations (if economically viable and regulated) provide HPC, training and hosted services at scale. Cross-border collaboration and safeguards enable broader adoption. (Source: Live Science)

At each phase, public–private partnerships, clear regulation and early anchor customers will accelerate progress while keeping safety and sustainability front and center.

13. Conclusion — compute where it matters

Edge computing in space is not a marginal novelty: it’s a practical response to the rapid growth in sensing capability and the pressing need for timely, actionable intelligence. The path from research prototypes (HPE’s SBC-2, cloud vendor experiments, hosted payloads) to commercial services is visible; we are in the transition from “can we do this?” to “how do we do this at scale and safely?” (Sources: HPCwire; Amazon Web Services, Inc.)

The winners will be organizations that combine robust engineering (power/thermal/radiation-aware hardware), software sophistication (orchestration, secure updates and federated learning), and operational discipline (spectrum coordination, safety and regulatory compliance). The result will be faster disaster response, more economical Earth observation, resilient space operations and entirely new services that rely on real-time processing off-Earth.
